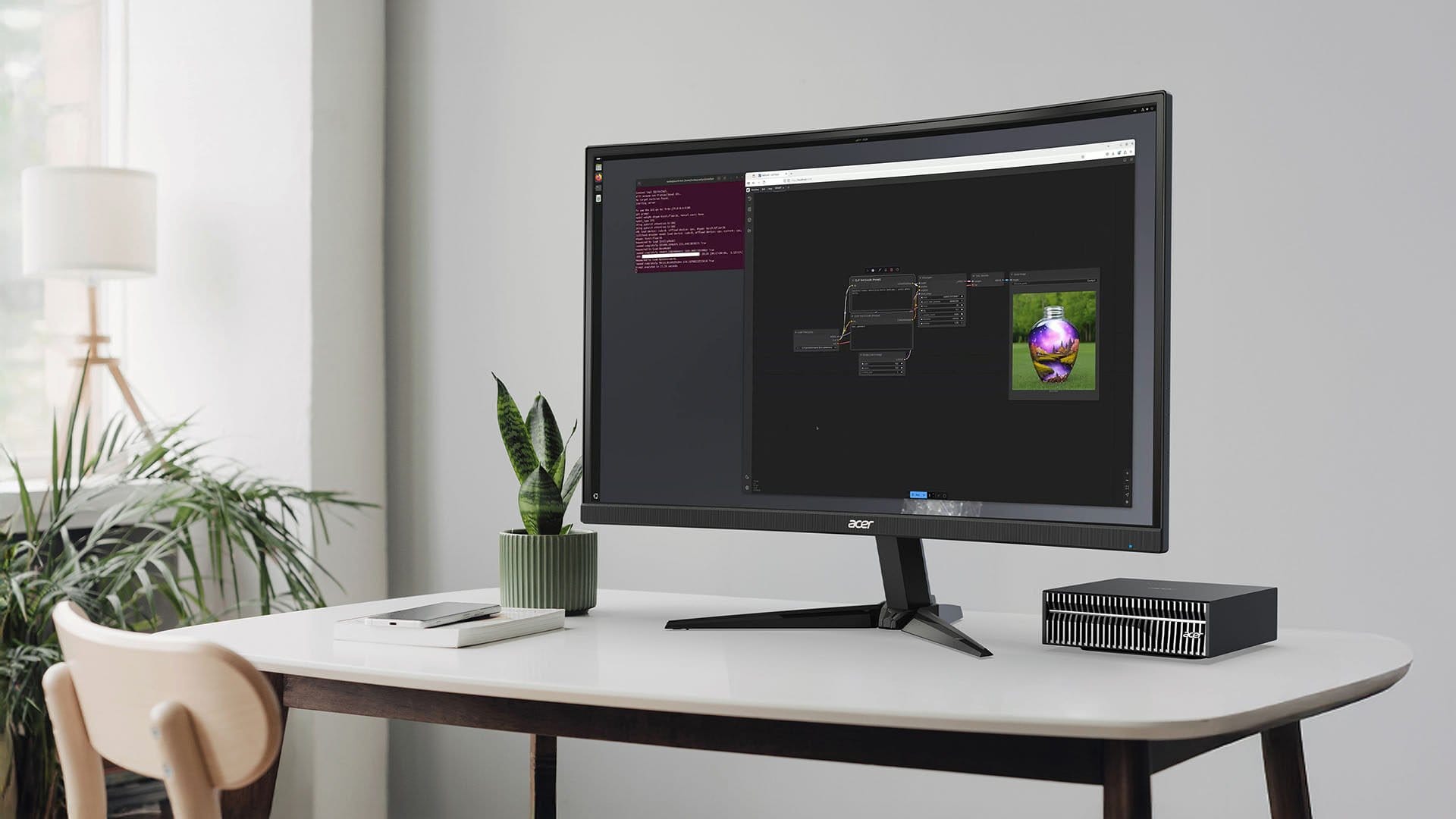

Acer has a new compact desktop built for local AI work. The Veriton GN100 uses NVIDIA’s GB10 Grace Blackwell Superchip, packs 128GB of unified memory and up to 4TB of NVMe storage, and delivers up to 1 PFLOPS of FP4 AI performance. It runs the NVIDIA AI software stack out of the box and supports common tools such as PyTorch, Jupyter and Ollama. For UAE buyers, the EMEA starting price of USD 3,999 works out to roughly AED 14,700 before VAT. Availability and final specs will vary by region.
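The dirham figure is simple arithmetic. Here is a minimal sketch, assuming the UAE dirham's peg of AED 3.6725 per US dollar and the UAE's 5% standard VAT rate. Acer's local channel may price the GN100 differently from a straight conversion.

```python
# Rough USD -> AED conversion for the GN100's EMEA starting price.
# Assumptions: AED peg of 3.6725 per USD and the UAE's 5% standard VAT rate.
# Actual UAE retail pricing may not follow a straight conversion.

AED_PER_USD = 3.6725  # UAE dirham peg to the US dollar
UAE_VAT_RATE = 0.05   # UAE standard VAT rate


def usd_to_aed(usd: float) -> float:
    """Convert a USD amount to AED at the pegged rate."""
    return usd * AED_PER_USD


def with_vat(amount: float, rate: float = UAE_VAT_RATE) -> float:
    """Add VAT to a pre-tax amount."""
    return amount * (1 + rate)


ex_vat = usd_to_aed(3999)
inc_vat = with_vat(ex_vat)
print(f"Before VAT: AED {ex_vat:,.0f}")
print(f"With 5% VAT: AED {inc_vat:,.0f}")
```

This gives about AED 14,686 before VAT (hence the rounded AED 14,700) and about AED 15,421 once 5% VAT is added.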

GB10 power in a 150 mm footprint

The headline is the silicon. The GB10 Grace Blackwell Superchip pairs a Blackwell GPU with Arm CPU cores, and both share a single pool of coherent unified memory.

- NVIDIA GB10 Grace Blackwell Superchip with next-generation CUDA cores and fifth-generation Tensor Cores

- 20 Arm-based CPU cores

- Up to 1 PFLOPS (FP4) AI performance

- 128GB LPDDR5x coherent unified memory

- Up to 4TB self-encrypting NVMe M.2 SSD

In practice, this means you can fine-tune or serve large models on-prem without renting cloud time. Because the CPU and GPU draw on the same 128GB pool, model weights, long context windows and their token buffers aren’t capped by a separate, smaller pool of GPU memory. If you’re following NVIDIA’s Blackwell roadmap and DLSS 4/RTX updates, our Gamescom wrap gives broader context on what Blackwell-class hardware enables for creators and developers.

Built for local LLMs, scalable when you need more

Acer ships the GN100 with DGX Base OS and the NVIDIA AI stack, so common workflows are ready on day one.

- Pre-installed NVIDIA AI software stack

- Works with PyTorch, Jupyter and Ollama

- Local execution for data privacy

- Scale-out via NVIDIA ConnectX-7 SmartNIC

Run Llama-style models and embeddings locally, test prompts, and avoid sending data to external clouds. When your workload grows, link two GN100 units over ConnectX-7 to handle models up to 405B parameters. If you’re sizing up an AI laptop as a companion device, we’ve covered the UAE launch of the Predator Helios 18P AI and what its RTX 5090 can do for training and inference on the go.
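Why does 405B need two units? A rough, weights-only estimate helps. This sketch assumes the model weights dominate memory use (it ignores KV cache, activations and OS overhead, all of which need extra headroom), treats 128GB as 128 × 10⁹ bytes, and compares 4-bit quantisation (in line with the FP4 figure above) against 8-bit. The helper names are illustrative and not part of any NVIDIA or Acer tool.

```python
import math

GB_PER_UNIT = 128  # unified memory per GN100, in (decimal) GB


def weights_gb(params: float, bits_per_param: int) -> float:
    """Approximate memory needed for model weights alone, in decimal GB."""
    return params * bits_per_param / 8 / 1e9


def units_needed(params: float, bits_per_param: int, gb_per_unit: int = GB_PER_UNIT) -> int:
    """Minimum number of units whose combined memory holds the weights (no headroom)."""
    return math.ceil(weights_gb(params, bits_per_param) / gb_per_unit)


for name, params in [("70B", 70e9), ("405B", 405e9)]:
    for bits in (4, 8):
        gb = weights_gb(params, bits)
        print(f"{name} @ {bits}-bit: {gb:.1f} GB -> {units_needed(params, bits)} unit(s)")
```

At 4-bit, a 405B model needs about 202.5 GB for weights alone: more than one unit’s 128GB, but within the 256GB two linked units provide. At 8-bit the same model would need around 405 GB, beyond a two-unit setup, while a 70B model fits on a single box at either precision.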

Ports, wireless and security in a small chassis

Despite its small size (just 150 × 150 × 50.5 mm and under 1.5 kg), the GN100 has the connectivity you’d expect from a workstation.

- Wi-Fi 7 and Bluetooth 5.1+

- 4× USB 3.2 Type-C, HDMI 2.1b, RJ-45 Ethernet

- ConnectX-7 SmartNIC for high-speed fabric

- Kensington lock support

Wi-Fi 7 helps when you’re moving big datasets across a fast home or office network. If you’re weighing up Wi-Fi 7 benefits in the GCC, our guide explains when it’s worth it and how to get real gains at home or in a studio. For fixed-line options that can keep up with multi-gigabit transfers, see our UAE broadband comparison.
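To put “big datasets” into numbers, transfer time is simply size divided by throughput. Here is a minimal sketch. The link speeds below are illustrative assumptions, not measured GN100 figures, and real-world Wi-Fi and Ethernet throughput usually falls below the nominal rate, which the optional `efficiency` factor accounts for.

```python
def transfer_seconds(size_gb: float, link_gbps: float, efficiency: float = 1.0) -> float:
    """Time to move size_gb gigabytes over a link of link_gbps gigabits per second.

    efficiency scales the nominal rate down to a realistic effective
    throughput (1.0 = ideal line rate).
    """
    if link_gbps <= 0 or not 0 < efficiency <= 1:
        raise ValueError("link_gbps must be positive and efficiency in (0, 1]")
    return size_gb * 8 / (link_gbps * efficiency)  # bytes -> bits, then divide by rate


# Illustrative: a 100 GB dataset over a few hypothetical effective link speeds.
for gbps in (1, 2.5, 10):
    print(f"{gbps:>4} Gbps: {transfer_seconds(100, gbps) / 60:.1f} min")
```

Under these assumptions, a 100 GB dataset takes about 13 minutes at an effective 1 Gbps but under 1.5 minutes at 10 Gbps. That gap is why faster wireless or multi-gig wired links matter for dataset-heavy work.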

UAE context: price, use cases, and who should care

Acer says EMEA pricing starts at USD 3,999 (about AED 14,700 before VAT). That puts the GN100 in entry-level server territory on price, but it’s far smaller and quieter than a rackmount machine. It’s best suited to:

- Private datasets that must stay on-prem

- Fast iteration on prompts, RAG pipelines and quantised LLMs

- Classroom or lab deployments for AI courses

- Studios building local tools for audio, video and image workflows

If your workload is mostly general content creation or photo work, a high-spec laptop might be enough. Our laptop buying advice for creators breaks down the trade-offs and which hardware matters for AI-assisted editing. If you’re building around Acer hardware, check the Swift Air 16 for a Copilot+ travel machine and Acer’s Predator Orion towers for classic GPU power at your desk.

Specs at a glance

Here’s the short list you’ll care about.

- Model: Acer Veriton GN100 AI Mini Workstation

- OS: DGX Base OS + NVIDIA AI stack

- Processor: 20-core Arm CPU (NVIDIA GB10 Grace Blackwell Superchip)

- Graphics: NVIDIA GB10 Grace Blackwell

- Memory: 128GB LPDDR5x unified

- Storage: Up to 4TB NVMe (self-encrypting)

- Ports: 4× USB-C (USB 3.2), HDMI 2.1b, RJ-45, ConnectX-7 NIC

- Networking: Wi-Fi 7, Bluetooth 5.1+

- Security: Kensington lock, local model execution

- Size/weight: 150 × 150 × 50.5 mm; <1.5 kg

- EMEA price: from USD 3,999 (about AED 14,700 before VAT)

The package aims to cut cloud spend and latency while keeping data private. In the UAE, check with Acer’s local channel for final specs and availability.

Can the GN100 run LLMs fully offline?

Yes. With 128GB unified memory and the GB10 Superchip, you can run quantised LLMs and vision models locally. The pre-installed NVIDIA AI stack plus tools like Ollama make setup easier.
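To show how simple local inference can be once Ollama is running, here is a minimal Python sketch. It calls Ollama’s local REST API (`/api/generate` on its default port, 11434) using only the standard library. It assumes the Ollama server is running on the GN100 and that you have already pulled a model. `llama3.1` is just an example tag, and the helper names are our own.

```python
import json
import urllib.request

# Ollama's default local endpoint; requests stay on the machine.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_payload(prompt: str, model: str = "llama3.1") -> bytes:
    """JSON body for a single, non-streamed generation request."""
    return json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")


def generate(prompt: str, model: str = "llama3.1", url: str = OLLAMA_URL, timeout: float = 300) -> str:
    """Send a prompt to the local Ollama server and return the generated text."""
    request = urllib.request.Request(
        url,
        data=build_payload(prompt, model),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.loads(response.read().decode("utf-8"))
    return body["response"]  # with stream=False, the full reply is in "response"
```

With a model pulled (for example via `ollama pull llama3.1`), calling `generate("Explain unified memory in one sentence.")` returns the reply as a string. The request only goes to localhost. Setting `stream` to `False` keeps the example short, while streaming would return the answer token by token.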

How does it scale beyond a single box?

Two GN100 units can be linked using the NVIDIA ConnectX-7 SmartNIC to handle models up to 405B parameters, with high-speed interconnect for distributed workloads.

Is Wi-Fi 7 necessary for this workstation?

Not required, but useful if you push multi-gig file transfers or stream high-res datasets across your LAN. A wired connection is still best for training runs.
