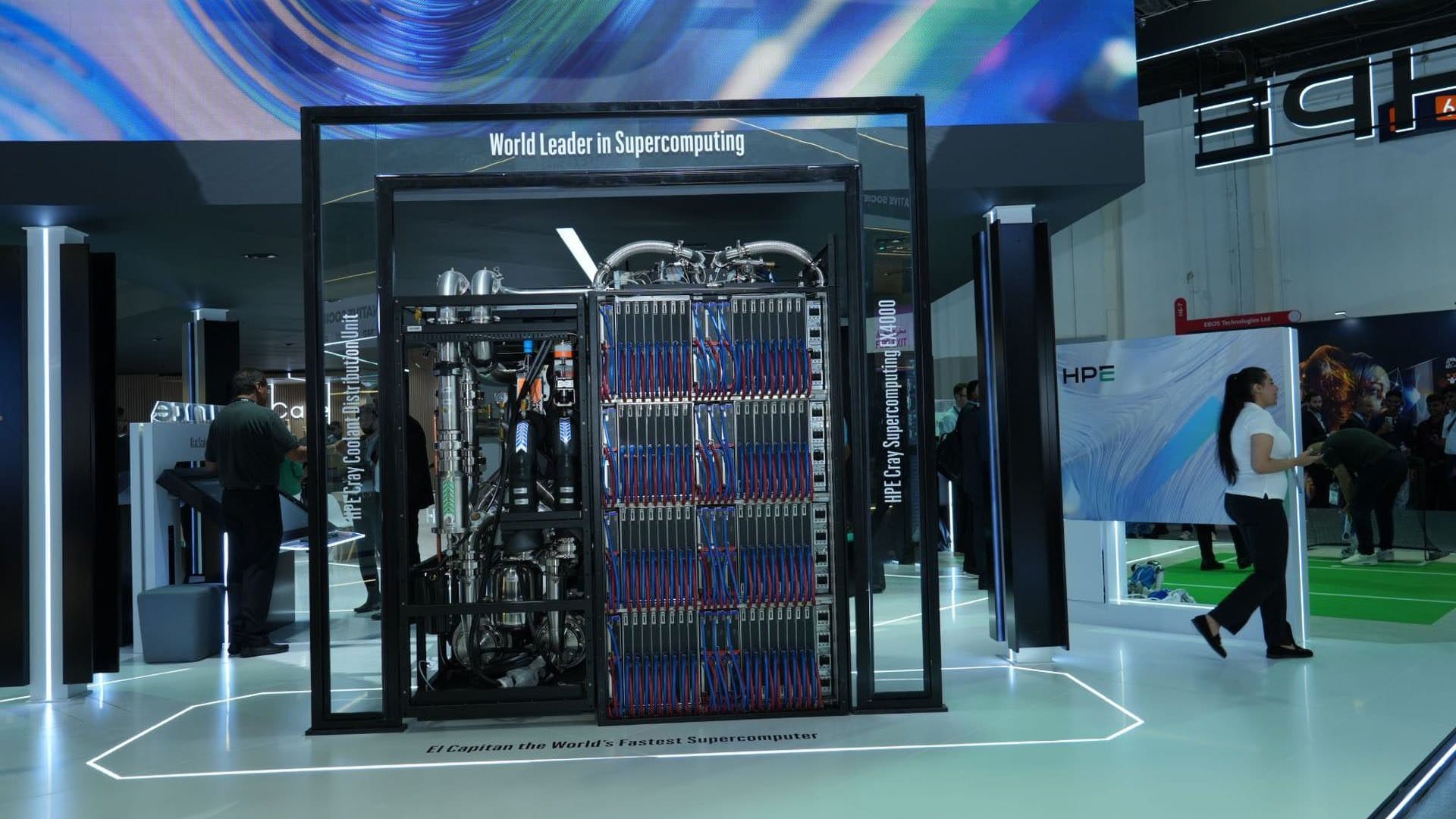

HPE is showing its Cray supercomputing kit at GITEX 2025 in Dubai, built around a 100% fanless direct liquid cooling (DLC) design. It’s the same approach used in El Capitan, currently the world’s fastest supercomputer. The pitch is simple: cooler racks, less power for cooling, tighter footprints, and lower noise, all useful if you’re building big AI clusters in the Gulf.

What HPE is actually showing

Cray systems plus a fully fanless DLC architecture that routes heat away at the blade and rack level.

- Cray EX architecture with DLC across hot components

- No fans in the server blades; heat is moved by liquid loops

- Designed for dense AI/accelerator nodes

- Cuts cooling energy and, per HPE, roughly halves floor space needs (vs air-cooled/hybrid)

HPE’s 100% fanless DLC removes server-level fans and uses cold plates and coolant loops to take heat straight out of CPUs, GPUs, and other components. The company says this lowers cooling power per blade and enables higher rack density. In practice, that means fewer aisles and less noise, which matters when Dubai data halls are packed with GPU trays. HPE claims systems using this design occupy roughly half the floor space of traditional approaches.

Why it matters for AI in the UAE

AI accelerators draw less power per FLOP than last year’s parts, but total draw keeps rising. Cooling is the new bottleneck.

- Next-gen accelerators raise rack power densities

- Air cooling struggles past certain heat flux levels

- DLC improves PUE and makes high-density UAE builds realistic

- Fits the region’s push for greener, quieter facilities

Even with better efficiency per chip, training and serving LLMs pushes racks well beyond comfortable air-cooling limits. Direct liquid cooling moves the heat transfer closer to the heat sources and trims fan power. That helps local operators hit energy targets without throttling GPUs or overbuilding white space. It also aligns with regional goals to cut data-centre emissions as AI demand climbs.
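For readers less familiar with PUE (power usage effectiveness), it is total facility power divided by IT power, so a value closer to 1.0 means less overhead. The sketch below shows how a lower cooling load moves that ratio. Every figure in it is a hypothetical placeholder, not an HPE or UAE site measurement, and the function name `pue` is ours.

```python
def pue(it_power_kw: float, cooling_power_kw: float, other_overhead_kw: float = 0.0) -> float:
    """Power usage effectiveness: total facility power / IT equipment power."""
    if it_power_kw <= 0:
        raise ValueError("IT power must be positive")
    total_kw = it_power_kw + cooling_power_kw + other_overhead_kw
    return total_kw / it_power_kw


# Hypothetical data hall: 1,000 kW of IT load, 100 kW of other overhead.
# The two cooling loads are illustrative assumptions only.
air_cooled = pue(it_power_kw=1000, cooling_power_kw=400, other_overhead_kw=100)
liquid_cooled = pue(it_power_kw=1000, cooling_power_kw=150, other_overhead_kw=100)

print(f"Hypothetical air-cooled PUE:    {air_cooled:.2f}")
print(f"Hypothetical liquid-cooled PUE: {liquid_cooled:.2f}")
```

One caveat: server fans are usually metered as part of the IT load, so removing them can lower total power without improving the PUE ratio by the same amount. PUE is a useful headline number, not the whole picture.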

The El Capitan link

The same Cray DNA runs El Capitan, the current TOP500 #1, which shows that the cooling and system design scale.

- El Capitan uses HPE Cray Shasta/EX technology

- Currently ranked #1 on TOP500 (June 2025 list)

- HPE features prominently in the Green500 rankings too

El Capitan, at Lawrence Livermore, sits at the top of the TOP500 as of June 2025. It’s built on HPE Cray architecture, underscoring that the platform—and its liquid-cooling strategy—scales to exascale. HPE also points out strong showings on the Green500, which ranks systems by performance per watt, reflecting the same focus on efficient cooling and interconnects.

What the fanless DLC claims look like in numbers

HPE’s public figures spell out the benefits.

- 37% reduction in cooling power per server blade vs hybrid DLC alone

- Lower utility costs, less carbon, less noise

- Higher cabinet density; about half the floor space required

In HPE’s materials, moving to a fully fanless DLC design trims cooling overhead per blade by 37% compared to hybrid DLC, while also enabling denser cabinets. That translates into meaningful OPEX reductions in the UAE, where power and chilled water costs for AI halls add up fast. Facilities can squeeze more throughput into the same footprint and keep acoustic levels sensible for on-floor work.
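To get a feel for what a 37% cut could mean at site level, here is a back-of-the-envelope sketch. Only the 37% figure comes from HPE. The baseline cooling power per blade, the blade count, and the electricity price are hypothetical inputs you would replace with your own, and the function name is ours.

```python
# HPE's cited reduction in cooling power per blade, fanless DLC vs hybrid DLC.
FANLESS_COOLING_REDUCTION = 0.37

HOURS_PER_YEAR = 8760


def annual_cooling_savings(baseline_cooling_w_per_blade: float,
                           blades: int,
                           price_per_kwh: float) -> tuple[float, float]:
    """Return (kWh saved per year, cost saved per year) under the 37% claim.

    Assumes blades run year-round and that the reduction applies
    uniformly to every blade, which real sites may not match.
    """
    saved_w = baseline_cooling_w_per_blade * FANLESS_COOLING_REDUCTION * blades
    saved_kwh = saved_w / 1000 * HOURS_PER_YEAR
    return saved_kwh, saved_kwh * price_per_kwh


# Hypothetical inputs: 100 W of cooling power per blade under hybrid DLC,
# 1,000 blades, and an electricity price of 0.10 per kWh in any currency.
kwh, cost = annual_cooling_savings(100, 1000, 0.10)
print(f"Energy saved per year: {kwh:,.0f} kWh")
print(f"Cost saved per year:   {cost:,.0f}")
```

The result scales linearly with each input, so the point is the shape of the calculation rather than the specific output. As HPE’s own framing suggests, actual savings depend on the site.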

The Green500 angle

Efficiency isn’t just talk—HPE-built machines show up near the top.

- Multiple HPE Cray systems feature in June 2025’s Green500

- Examples include Adastra 2, Isambard-AI, Tuolumne, and others

- Focus is performance per watt, not just peak FLOPS

Look at the June 2025 Green500: HPE Cray systems like Adastra 2 and Isambard-AI rank highly on efficiency. That doesn’t prove every HPE build will be the most frugal, but it supports the claim that the company’s cooling and fabric choices help squeeze more work out of each watt—vital when GPUs run near the edge of rack power limits.

Is El Capitan still the world’s fastest supercomputer?

As of June 2025’s TOP500, yes. El Capitan holds #1. Lists update twice a year.

What does “100% fanless” mean here?

Server blades remove onboard fans; liquid carries heat away via cold plates and a rack-level loop. Facility fans may still exist, but server-level fans are eliminated.

How much power does the fanless DLC save?

HPE cites a 37% reduction in cooling power per blade versus hybrid DLC, plus gains from higher rack density. Actual savings depend on your site.
