At the Snapdragon Summit 2025, Steven Bathiche of Microsoft explained how Qualcomm’s Snapdragon NPU is reshaping the future of computing. By combining domain-specific silicon with new software techniques, Bathiche showed how on-device AI is crossing thresholds once thought impossible. For businesses and developers, the result is sustained performance, efficient power use, and new classes of user interaction.

The New Baseline: High Compute, Low Power

With Qualcomm Snapdragon processors, Windows devices can now run continuous AI workloads without draining the battery. The formula: deliver massive compute while preserving efficiency.

Copilot Plus PCs average 1.4 trillion monthly inference steps… This enables sustained intelligence running in the background, all without eating your battery.

Why NPUs Matter

Unlike general-purpose processors, NPUs are built specifically for tensor operations, such as the matrix multiplications at the heart of neural networks. This specialization accelerates neural network inference while reducing power draw.

NPUs are wholly optimized to operate on tensors… a dedicated neural processor unit enables a level of efficiency and speed like none other.
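In practice, "tensor operations" mostly means matrix multiplication, which breaks down into long chains of multiply-accumulate steps. The plain-Python reference below shows one such operation at low precision: int8 inputs with a wider accumulator, a common pattern in NPU inference. It is a generic illustration under our own assumptions, not Qualcomm's hardware design, and the function name `int8_matmul` is hypothetical.

```python
# Illustrative only: a generic int8 x int8 matrix multiply with a wide accumulator,
# the style of low-precision tensor arithmetic NPUs typically run in hardware.
# `int8_matmul` is a hypothetical name, not a Qualcomm or Windows API.
from typing import List

Matrix = List[List[int]]


def int8_matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply two int8 matrices, accumulating each dot product in a wider integer."""
    rows, inner, cols = len(a), len(b), len(b[0])
    if any(len(row) != inner for row in a):
        raise ValueError("inner dimensions must match")
    # Reject values that would not fit in a signed 8-bit integer.
    for m in (a, b):
        for row in m:
            for v in row:
                if not -128 <= v <= 127:
                    raise ValueError(f"{v} is outside the int8 range")
    out: Matrix = [[0] * cols for _ in range(rows)]
    for i in range(rows):
        for j in range(cols):
            acc = 0  # an int32 register on real hardware; Python ints are unbounded
            for k in range(inner):
                acc += a[i][k] * b[k][j]  # one multiply-accumulate (MAC) step
            out[i][j] = acc
    return out
```

Calling `int8_matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]])` returns `[[19, 22], [43, 50]]`. A CPU works through these loops a few values at a time, while an NPU typically dedicates large arrays of multiply-accumulate units to process whole tiles of a matrix in parallel.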

Software Side: Smaller Models, Same Quality

Hardware advances pair with model compression techniques such as quantization. These shrink models so they can run faster and leaner at the edge.

- Frontier models start with high-precision floating-point weights.

- Quantization compresses those weights to 2-bit values.

- Models become drastically smaller, and Bathiche reports no loss in quality.

Using special techniques to compress those numbers down to two-bit representation… we’re able to make models drastically smaller, all without losing quality.
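The steps above can be sketched with the simplest form of quantization: each small group of weights gets its own scale and offset, and every weight is rounded to one of four levels (2 bits). This is a minimal round-to-nearest sketch under our own assumptions. The talk does not say which "special techniques" Microsoft uses, and naive rounding at 2 bits is generally lossier than the refined methods production systems rely on. The names `quantize_group` and `dequantize_group` are hypothetical.

```python
# Minimal sketch: plain round-to-nearest 2-bit quantization with one scale and
# offset per group of weights. This is NOT the (unspecified) technique from the
# talk; `quantize_group` and `dequantize_group` are hypothetical helpers.
from typing import List, Tuple

BITS = 2
LEVELS = 2 ** BITS  # four representable codes: 0, 1, 2, 3


def quantize_group(weights: List[float]) -> Tuple[List[int], float, float]:
    """Map floats to 2-bit codes plus a (scale, offset) pair for the group."""
    lo, hi = min(weights), max(weights)
    # Spread the group's range evenly over the available levels.
    scale = (hi - lo) / (LEVELS - 1) if hi > lo else 1.0
    # Round to the nearest level and clamp to guard against float drift.
    codes = [min(LEVELS - 1, max(0, round((w - lo) / scale))) for w in weights]
    return codes, scale, lo


def dequantize_group(codes: List[int], scale: float, offset: float) -> List[float]:
    """Reconstruct approximate floats from 2-bit codes."""
    return [offset + c * scale for c in codes]
```

For the group `[-0.6, -0.2, 0.1, 0.6]`, the codes come out as `[0, 1, 2, 3]`, and each reconstructed weight lands within half a step (here 0.2) of the original. Smaller groups track the weights more closely but spend more memory on scales and offsets.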

Prototype Spotlight: Two-Bit Phi Silica

Microsoft’s Phi Silica, a small language model designed to run on the NPU, demonstrates this principle. A prototype 2-bit version uses half the memory footprint, runs faster, and maintains output quality.

This is a two-bit version of Phi Silica… utilizing the NPU more effectively with half the memory footprint and faster.
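Halving the bit width halves weight storage because more weights fit in each byte: four 2-bit codes versus two 4-bit codes. The sketch below packs codes at either width to show the effect. It assumes the comparison baseline is a 4-bit build, which the talk does not state, and the helpers `pack_codes` and `unpack_codes` are hypothetical.

```python
# Sketch: pack fixed-width integer codes into bytes to compare storage at
# 2 bits vs 4 bits. `pack_codes` / `unpack_codes` are hypothetical helpers.
from typing import List


def pack_codes(codes: List[int], bits: int) -> bytes:
    """Pack unsigned codes of width `bits` into bytes, lowest bits first."""
    if bits <= 0 or 8 % bits != 0:
        raise ValueError("bits must divide 8")
    per_byte = 8 // bits
    mask = (1 << bits) - 1
    out = bytearray()
    for start in range(0, len(codes), per_byte):
        byte = 0
        for slot, code in enumerate(codes[start:start + per_byte]):
            if not 0 <= code <= mask:
                raise ValueError(f"code {code} does not fit in {bits} bits")
            byte |= code << (slot * bits)  # place the code in its slot
        out.append(byte)
    return bytes(out)


def unpack_codes(data: bytes, bits: int, count: int) -> List[int]:
    """Recover the first `count` codes of width `bits` from packed bytes."""
    per_byte = 8 // bits
    mask = (1 << bits) - 1
    codes = []
    for byte in data:
        for slot in range(per_byte):
            codes.append((byte >> (slot * bits)) & mask)
    return codes[:count]
```

Packing 1,024 codes takes 256 bytes at 2 bits and 512 bytes at 4 bits, and `unpack_codes` recovers the original values. Real runtimes also store per-group scales and offsets, so the overall saving is close to, but not exactly, one half.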

From 4B To 14B: On-Device Reasoning

Last year’s milestone was a 4B-parameter model running locally. Today, Snapdragon NPUs support 14B-parameter models. Yet Bathiche stressed that reasoning ability matters more than scale.

- 14B parameters, tuned for chain-of-thought reasoning.

- Runs fully on battery.

- Competitive with leading cloud-hosted models on benchmarks.

Here, we just released a 14 billion parameter model running on the Snapdragon NPU… a thinking model… agent-like, all running on battery.
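A quick calculation shows why low-bit weights matter at this scale. Weight storage is roughly parameters × bits ÷ 8 bytes. The sketch below applies that rule of thumb to 4B and 14B models. It counts weights only (no activations, KV cache, or quantization metadata), and the talk does not say what precision the 14B model uses.

```python
# Rule-of-thumb weight storage: params * bits / 8 bytes (weights only;
# activations, KV cache, and quantization scales are ignored).


def weight_gb(params: float, bits: int) -> float:
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return params * bits / 8 / 1e9


for params in (4e9, 14e9):
    for bits in (16, 4, 2):
        print(f"{params / 1e9:.0f}B params @ {bits}-bit: {weight_gb(params, bits):.1f} GB")
```

At 16-bit precision a 14B model needs about 28 GB for its weights alone; at 4 bits that drops to about 7 GB, and at 2 bits to about 3.5 GB. That gap is what makes a model of this size a realistic candidate for a laptop.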

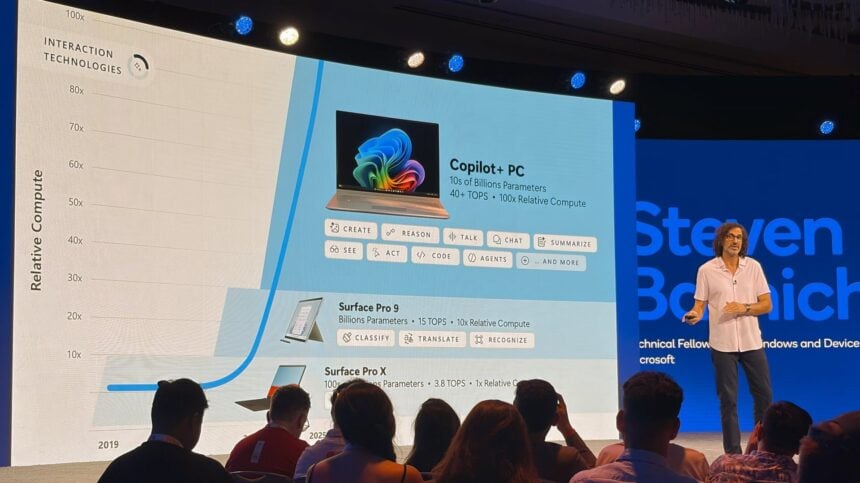

The Pattern: Compute Jumps Drive New UX

History shows each compute breakthrough brings new classes of user experience. Bathiche drew a direct line from mechanical computing to today’s agent-based AI.

You start seeing a correlation from compute to experience… every step, how complex and more sophisticated you have, from translation to reasoning.

Cloud + Edge: One System

For Microsoft, the future is hybrid. Devices and cloud infrastructure operate as one system, balancing workloads for speed, security, and context.

- Cloud orchestrates; device executes.

- Locality reduces latency and risk.

- Developers can target both without compromise.

The next computer is not any single thing… It’s the cloud working with your local device to deliver next-generation computing, working as one.
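Bathiche did not describe how work is divided between cloud and device, so the following is a purely hypothetical placement policy that illustrates the trade-offs this section lists: locality for privacy and latency, the cloud for work beyond local capacity. The `Task` fields, the `route` function, and the 14B local limit (borrowed from the model size cited above) are all assumptions.

```python
# Hypothetical cloud/edge placement policy. Nothing here is a Microsoft API;
# `Task`, `route`, and LOCAL_MAX_PARAMS are illustrative assumptions.
from dataclasses import dataclass
from enum import Enum


class Target(Enum):
    DEVICE = "device"
    CLOUD = "cloud"


@dataclass
class Task:
    name: str
    contains_private_data: bool
    needs_realtime: bool
    est_params_needed: float  # hypothetical: model size the task is judged to need


# Assumed capacity of the on-device model (the talk cites 14B parameters).
LOCAL_MAX_PARAMS = 14e9


def route(task: Task, online: bool = True) -> Target:
    """Decide where a task runs, favoring locality when it is good enough."""
    if not online:
        return Target.DEVICE  # the cloud is unreachable
    if task.contains_private_data or task.needs_realtime:
        return Target.DEVICE  # keep data on the PC and avoid network round trips
    if task.est_params_needed > LOCAL_MAX_PARAMS:
        return Target.CLOUD  # beyond what the local model can handle
    return Target.DEVICE  # default to local execution when it suffices
```

With this policy, a private or latency-sensitive task stays on the PC, while a large, non-sensitive job goes to the cloud when a connection is available. A production orchestrator would also weigh cost, battery state, and model quality.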

What This Means For You

- Build for on-device AI to deliver real-time, private experiences.

- Train and deploy models with quantization in mind.

- Design agent workflows that anticipate user intent.

- Treat always-on AI as standard, not optional.

- Architect applications for seamless cloud-edge integration.

Steven Bathiche’s remarks at the Snapdragon Summit 2025 underscored a clear message: the leap in compute power driven by Snapdragon NPUs is not just an incremental upgrade, but a structural shift in how PCs are built and used.

With efficient on-device AI, compressed models, and hybrid cloud–edge orchestration, Copilot+ PCs are positioned to move beyond productivity tools and become intelligent partners. For businesses and developers, this moment signals the need to adapt strategies now, because the next generation of computing is no longer on the horizon; it is already here.